Guarantee ROI for your NVIDIA DGX™ deployment with Lambda’s deep learning expertise

Accelerate development with purpose-built software for ML/AI

All DGX systems come with the DGX software stack, including AI frameworks, scripts, and pre-trained models. It also comes with cluster management, network/storage acceleration libraries, and an optimized OS.

Maximize uptime with first-party support by NVIDIA

Get your team up and running quickly with NVIDIA’s onboarding programs and comprehensive hardware, software, and ML support customized to your organization.

Scale efficiently by leveraging Lambda’s expertise in deep learning

Build tailored MLOps infrastructure for your company with consulting from Lambda engineers on machine learning frameworks and training platforms, as well as compute hardware, power, networking, and storage.

Bring infrastructure online faster with less expense

We can install and deploy your machines on-site, or you can host them with Lambda Colocation and save on operating expenses as well.

SOLUTIONS

NVIDIA DGX™ compute solutions

As your organization and compute workloads grow, Lambda’s deep learning engineers can provide guidance and support on choosing the right compute solutions tailored to your applications and requirements.

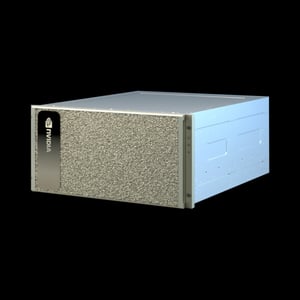

NVIDIA DGX™ H100

The fourth generation of the world's most advanced AI system, providing maximum performance.

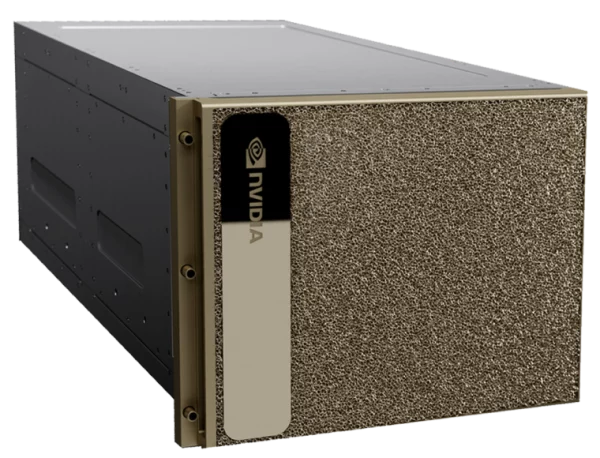

NVIDIA DGX™ A100

The third generation of the world’s most advanced AI system, unifying all AI workloads.

NVIDIA DGX SuperPOD™

Full-cycle, industry-leading infrastructure for the fastest path to AI innovation at scale.

COLOCATION

Your servers. Our data center.

Lambda Colocation makes it easy to deploy and scale your machine learning infrastructure. We'll manage racking, networking, power, cooling, hardware failures, and physical security. Your servers will run in a Tier 3 data center with state-of-the-art cooling designed for GPUs, and you'll get remote access to them just as you would with a public cloud.

Fast support

If hardware fails, our on-site data center engineers can quickly diagnose the problem and replace parts.

Optimal performance

Our state-of-the-art cooling keeps your GPUs cool to maximize performance and longevity.

High availability

Our Tier 3 data center has redundant power and cooling to ensure your servers stay online.

No network setup

We handle all network configuration and provide you with remote access to your servers.

10,000+ research teams trust Lambda


COMING SOON

NVIDIA DGX™ H100

Up to 6x faster training with next-generation NVIDIA H100 Tensor Core GPUs based on the Hopper architecture.*

- 8U server with 8x NVIDIA H100 Tensor Core GPUs

- 1.5x the inter-GPU bandwidth of DGX A100

- 2x the networking bandwidth of DGX A100

- Up to 30x higher inference performance**

*Training on MoE Switch-XXL (395B parameters); pending verification.

**Inference on a Megatron 530B-parameter chatbot model with input sequence length = 128 and output sequence length = 20; 32 A100 GPUs on an HDR InfiniBand network vs. 16 H100 GPUs on an NDR InfiniBand network.